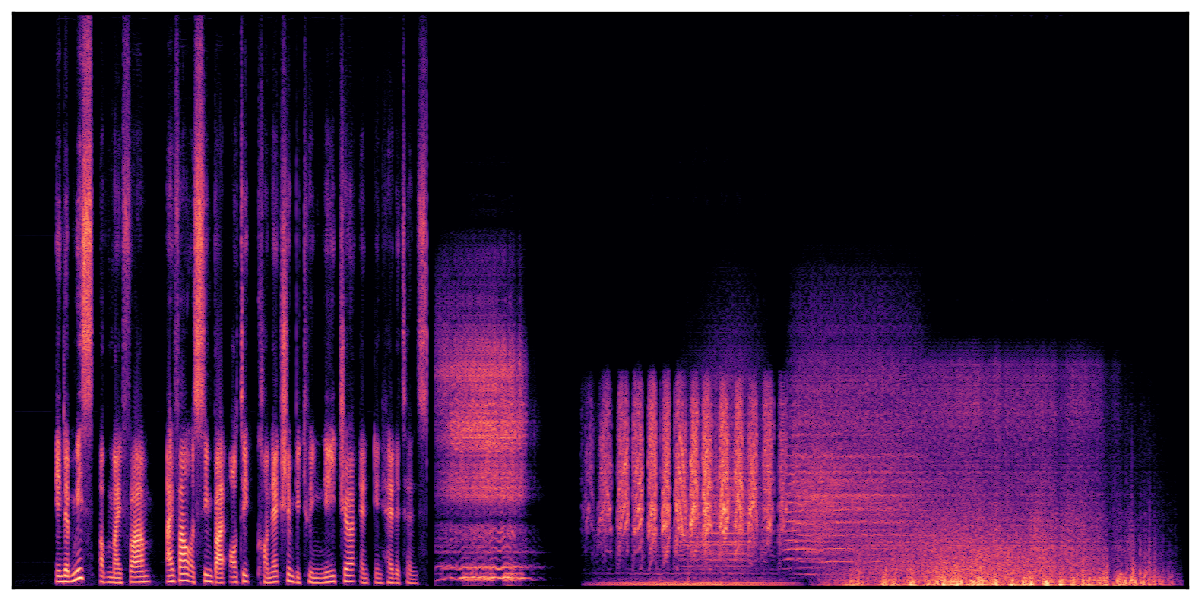

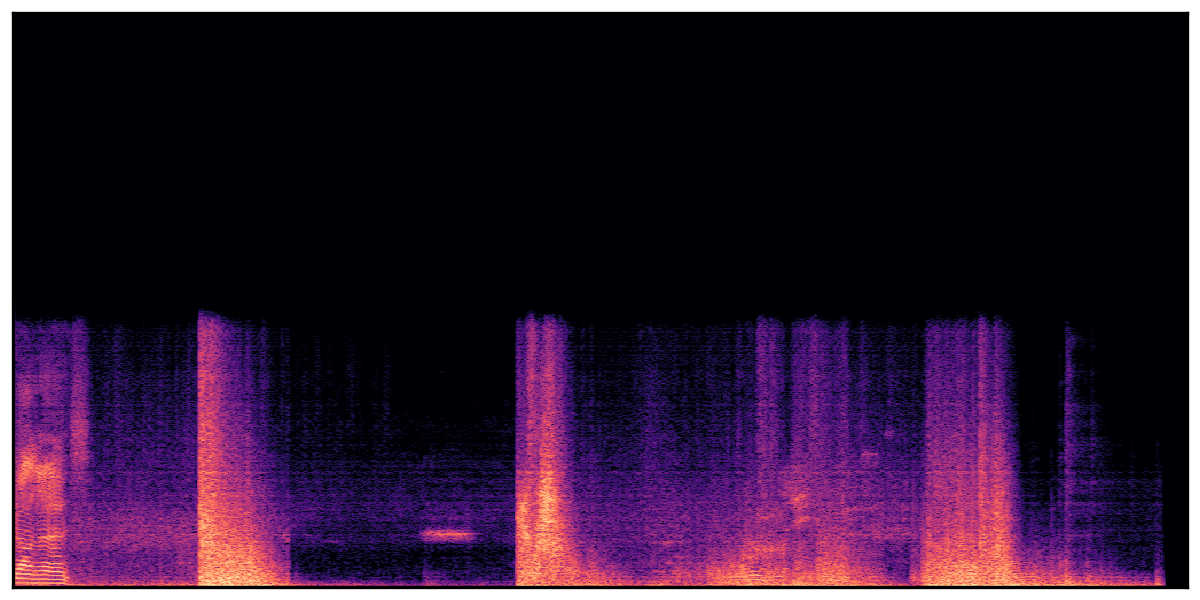

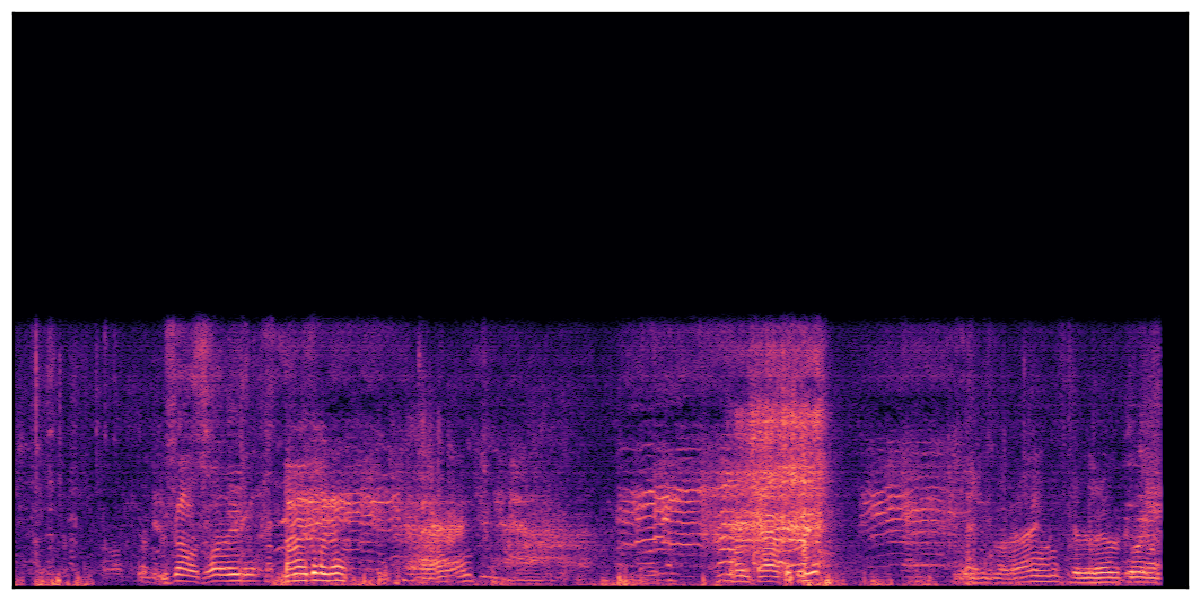

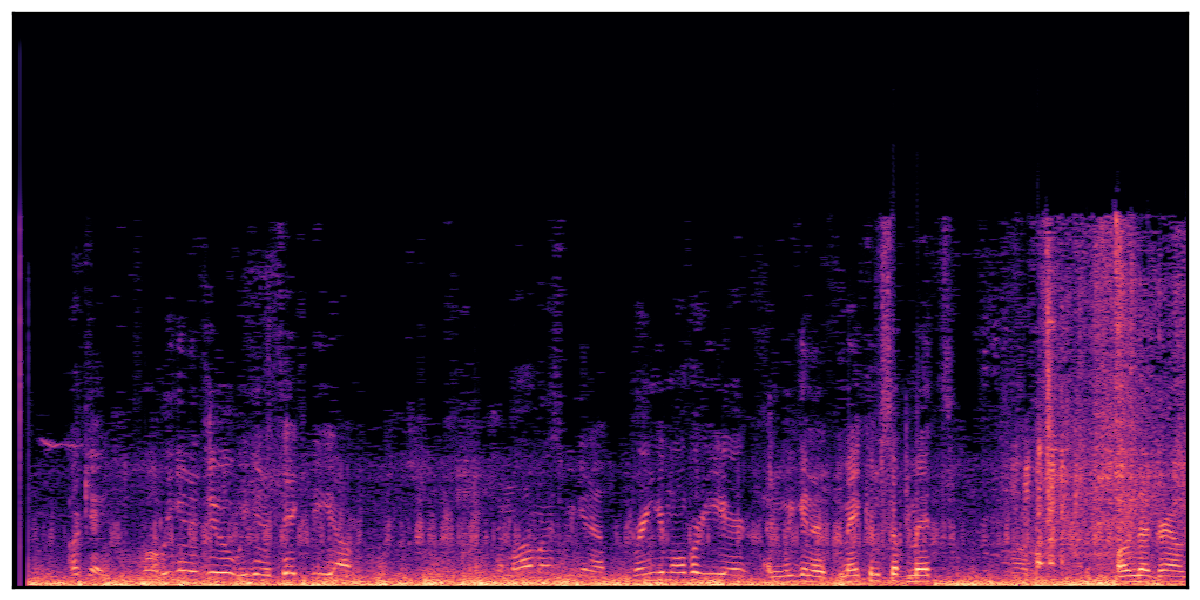

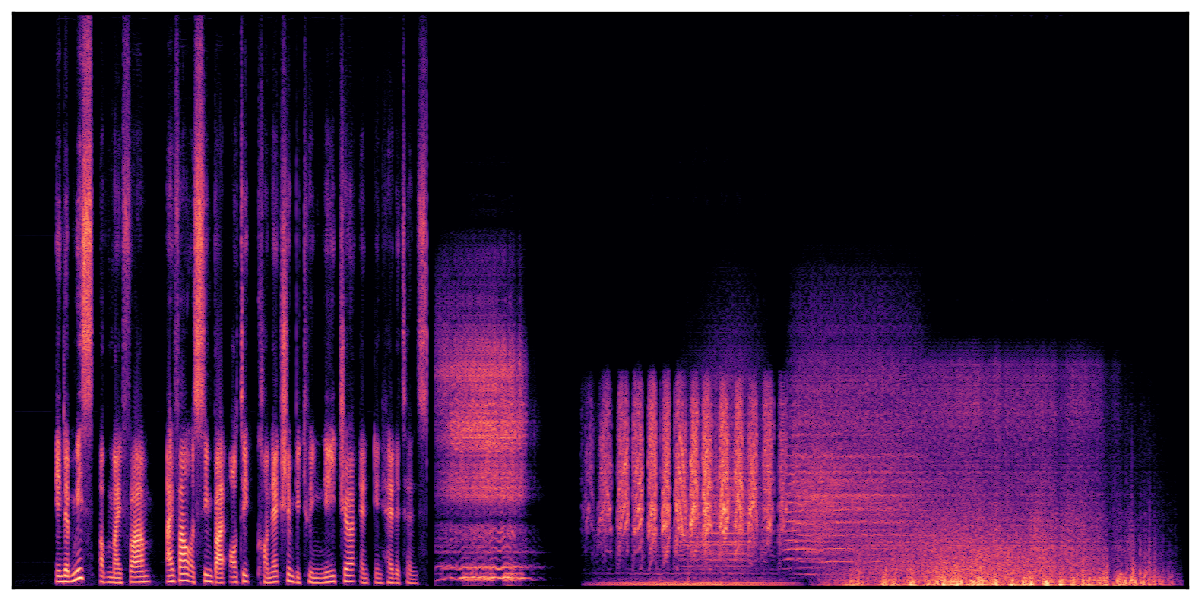

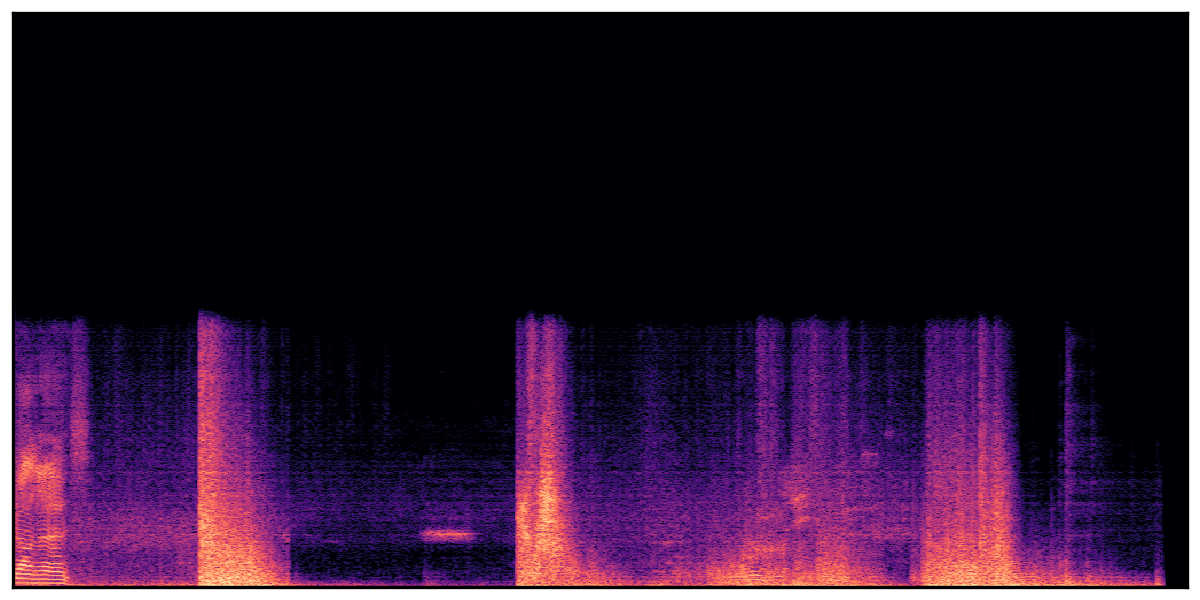

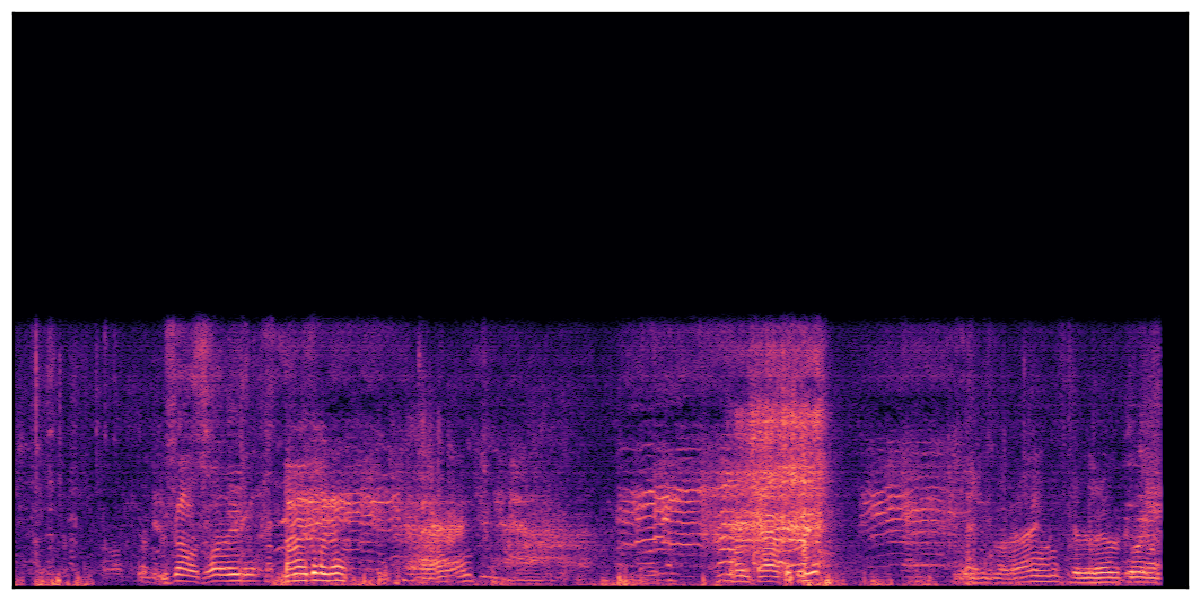

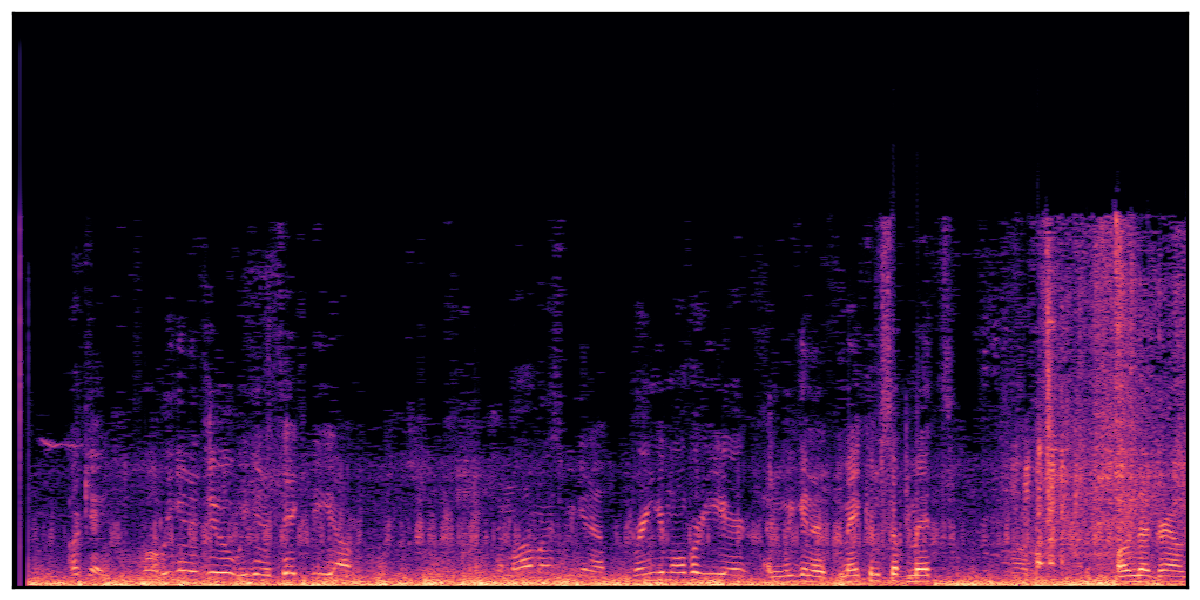

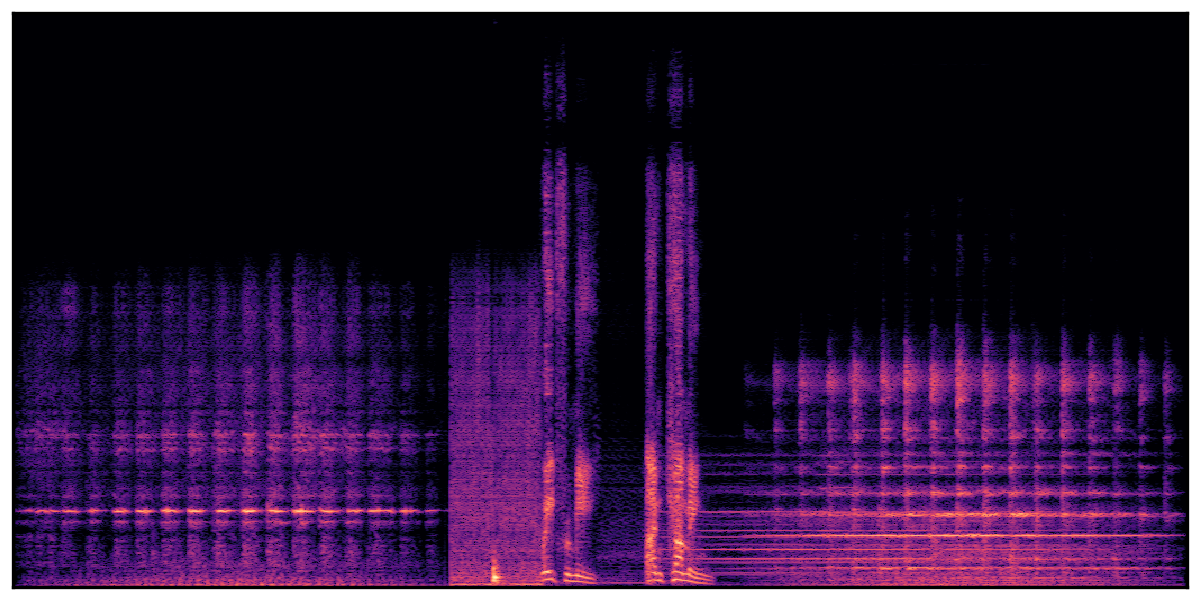

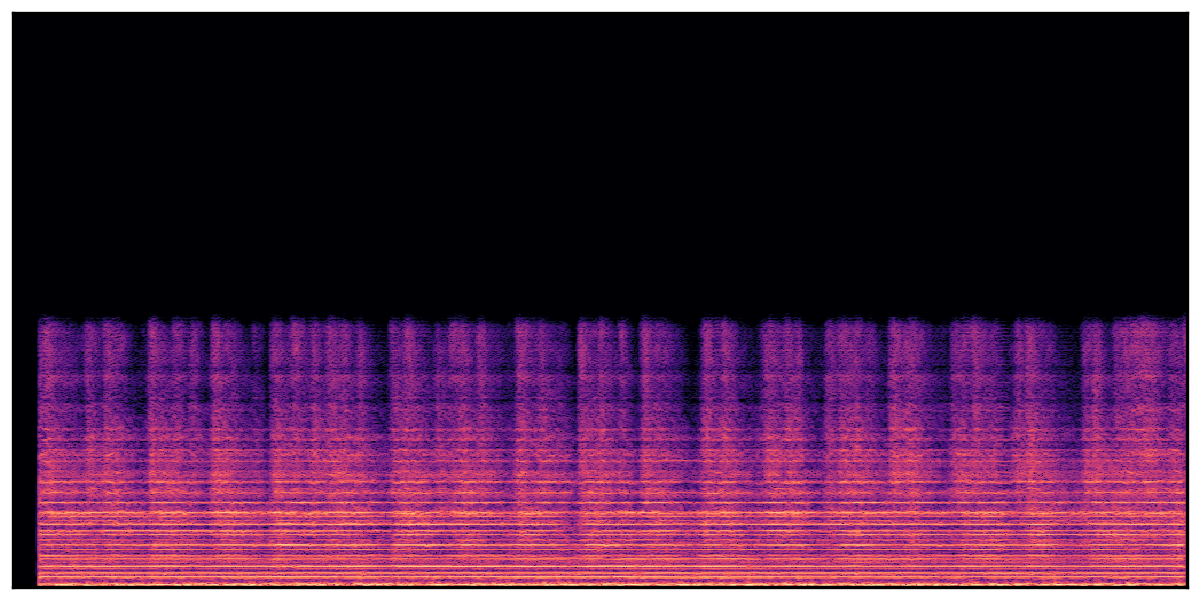

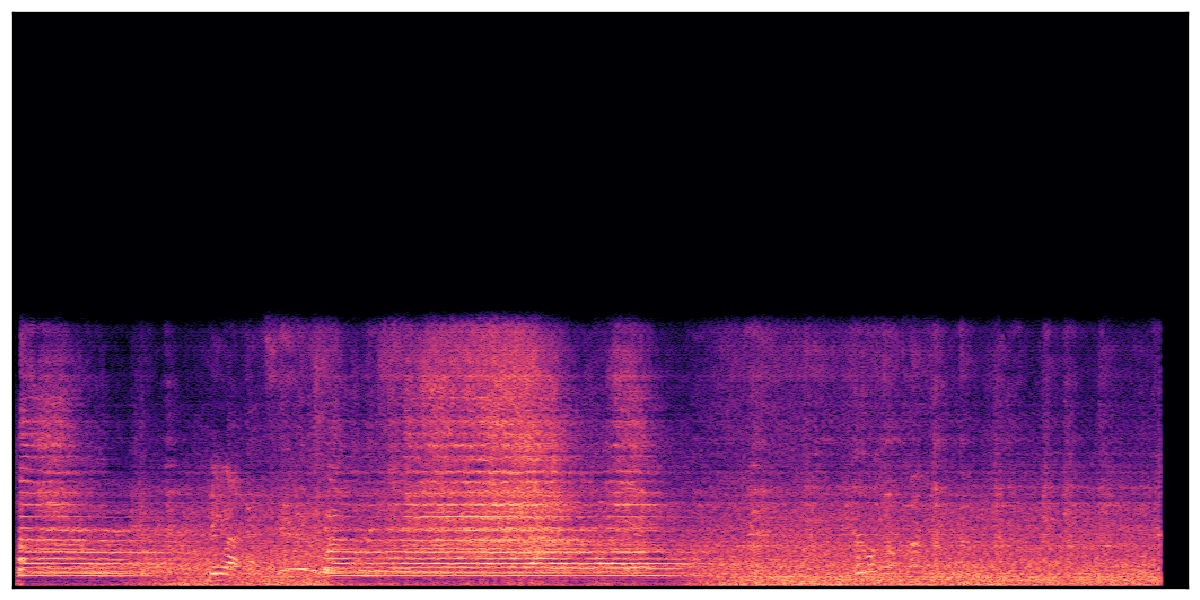

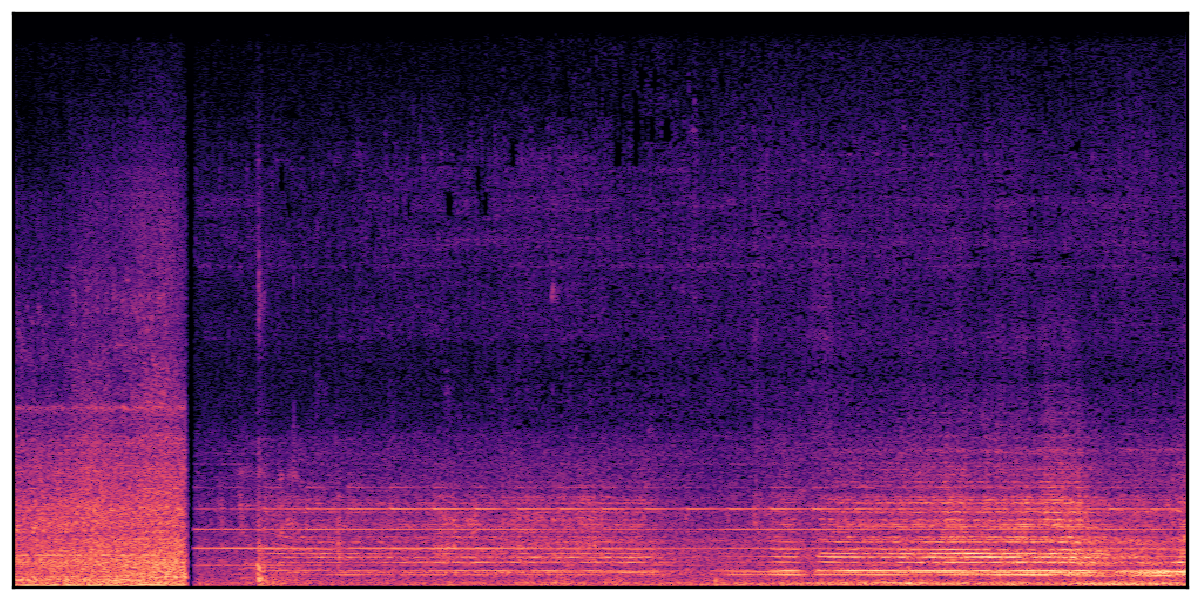

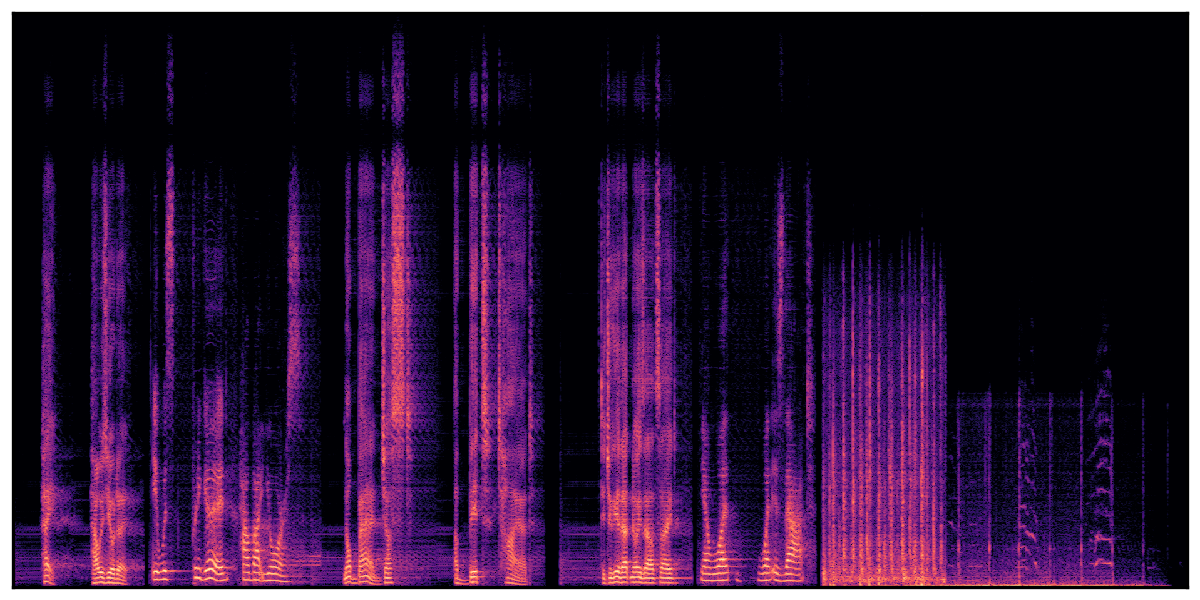

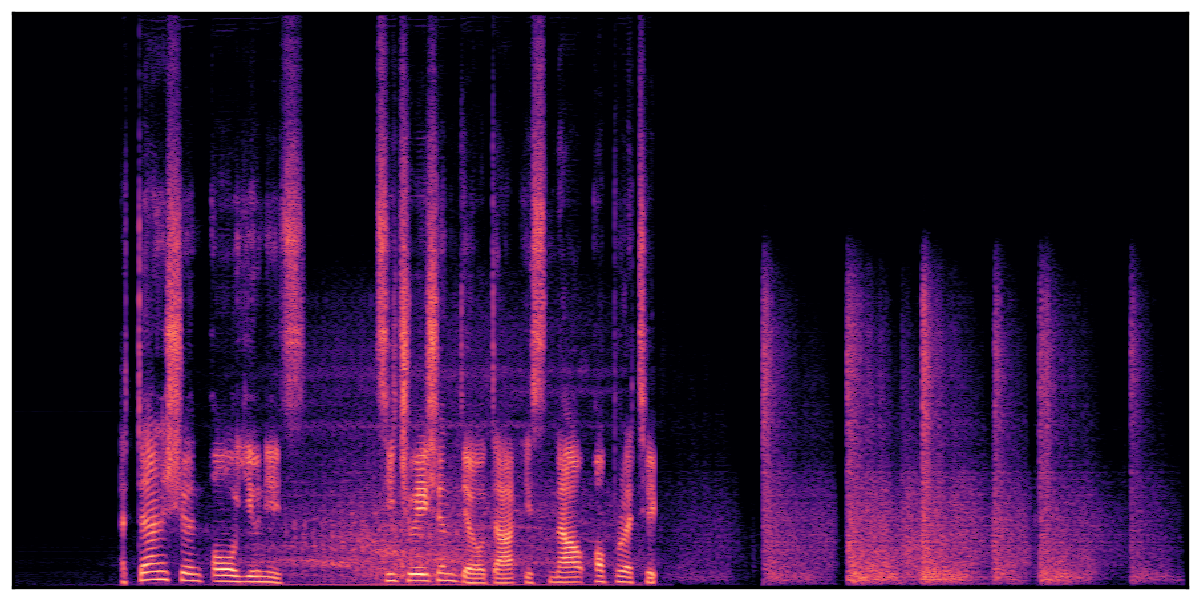

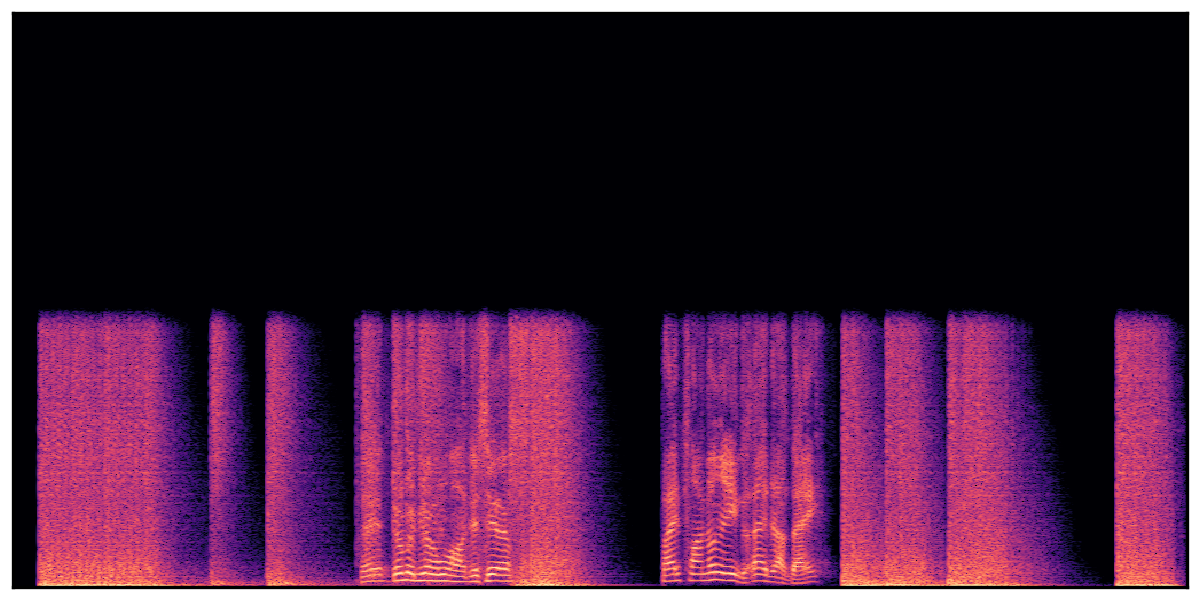

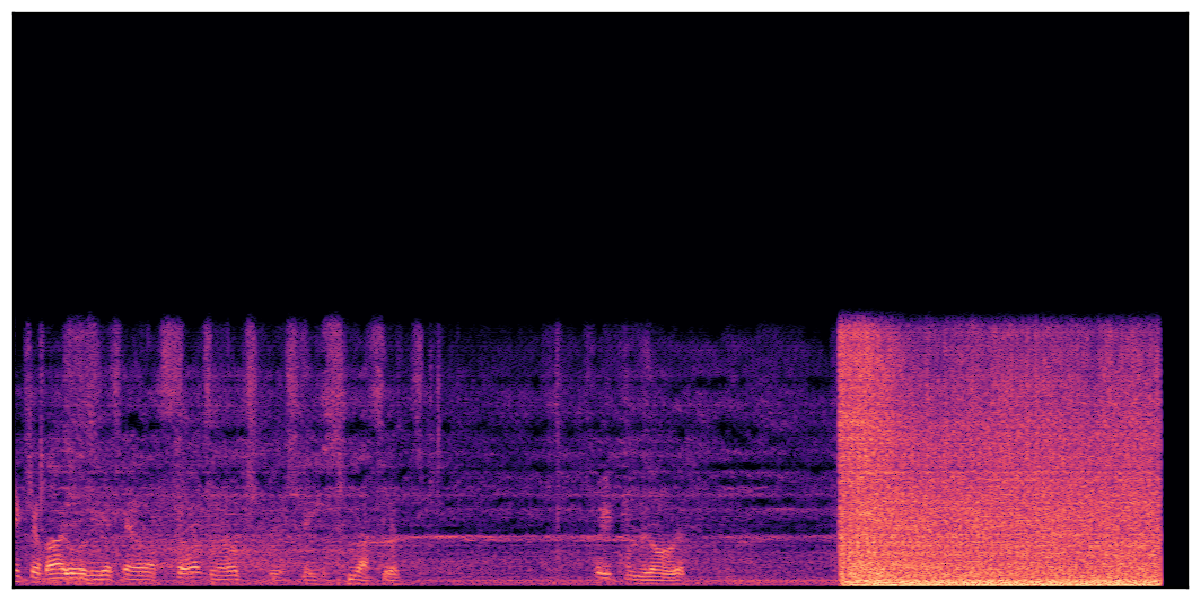

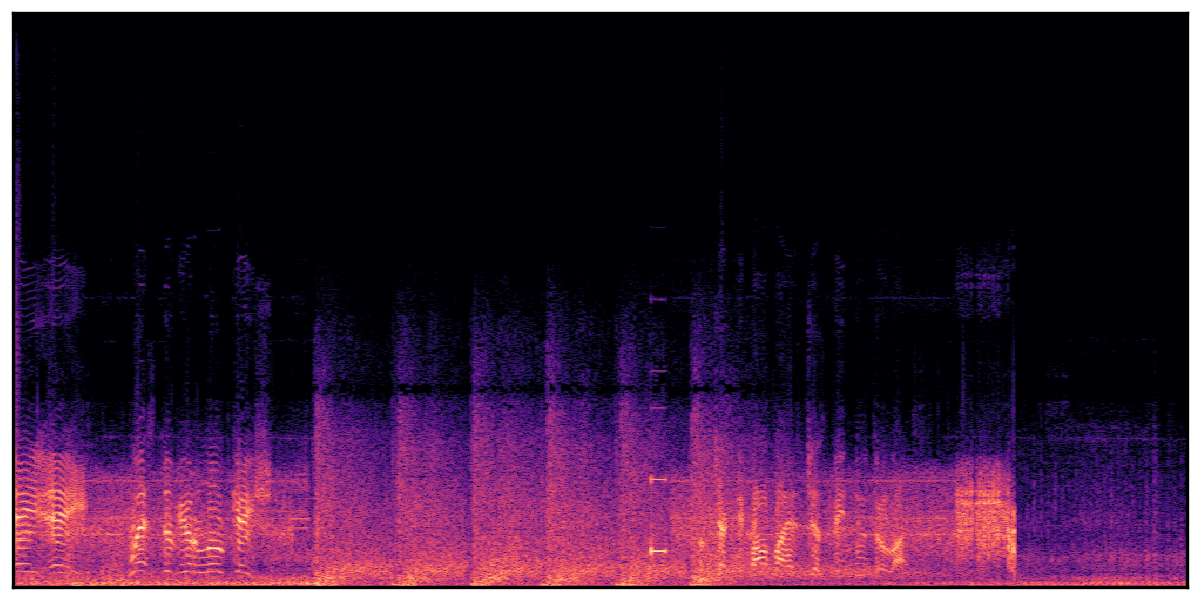

(a) Generated audio clips by WavJourney.

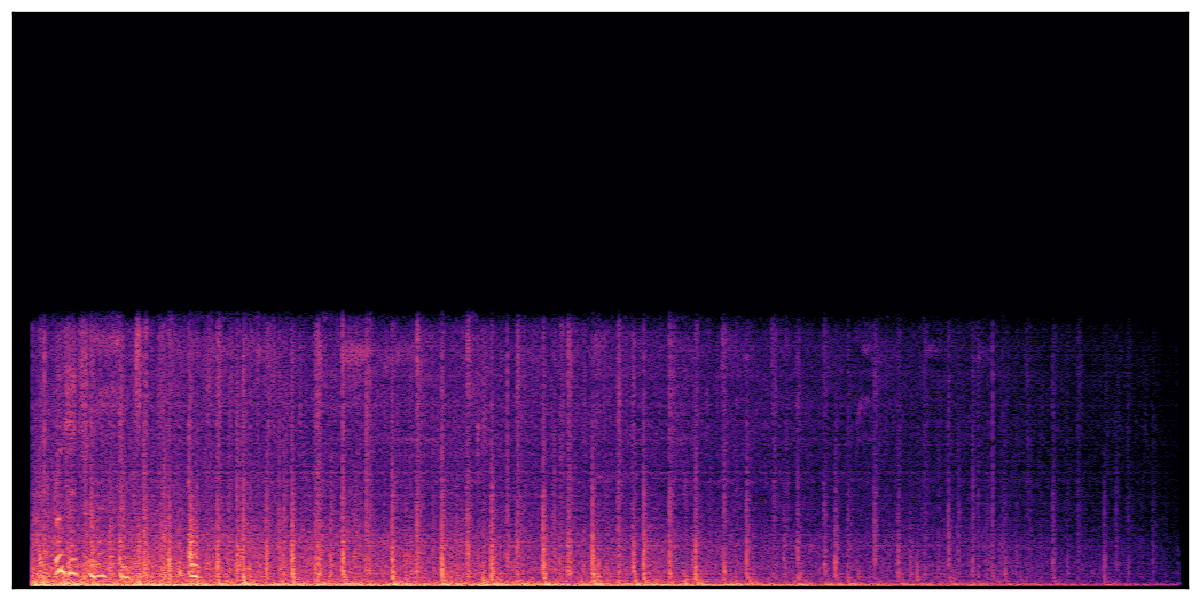

(b) Generated audio clips by AudioLDM.

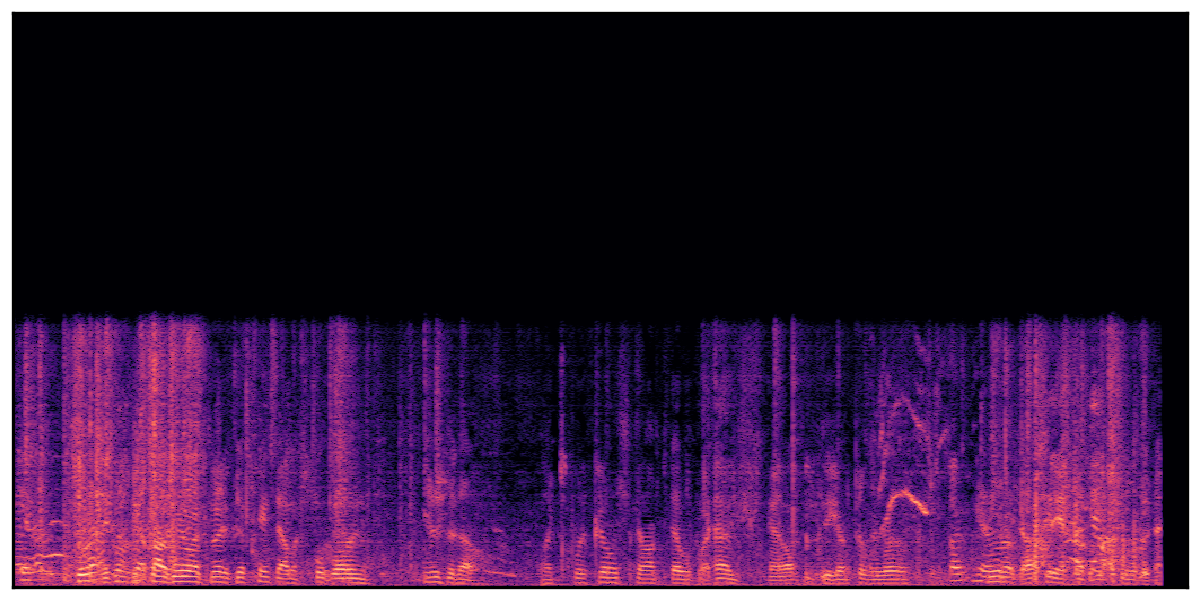

(c) Generated audio clips by Tango.

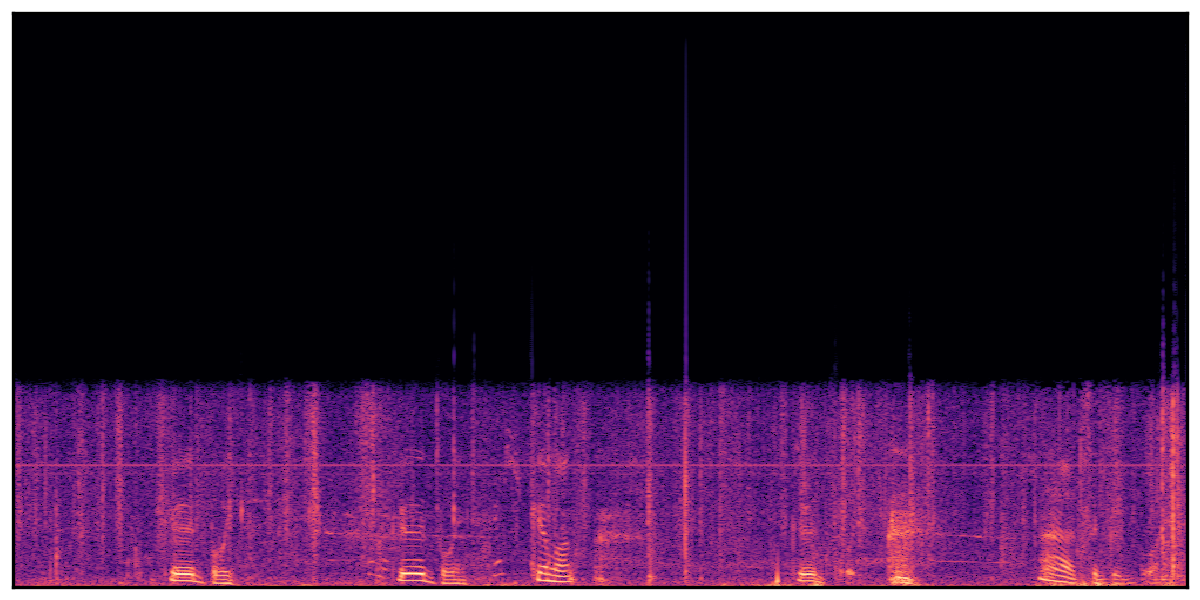

(d) Ground truth audio in AudioCaps dataset.


Xubo Liu¹, Zhongkai Zhu², Haohe Liu¹, Yi Yuan¹, Meng Cui¹, Qiushi Huang¹, Jinhua Liang³, Yin Cao⁴, Qiuqiang Kong⁵, Mark D. Plumbley¹, Wenwu Wang¹

¹University of Surrey, ²Independent Researcher, ³Queen Mary University of London, ⁴Xian Jiaotong Liverpool University, ⁵The Chinese University of Hong Kong


Large Language Models (LLMs) have shown great promise in integrating diverse expert models to tackle intricate language and vision tasks. Despite their significance in advancing the field of Artificial Intelligence Generated Content (AIGC), their potential in intelligent audio content creation remains unexplored. In this work, we tackle the problem of creating audio content with storylines encompassing speech, music, and sound effects, guided by text instructions. We present WavJourney, a system that leverages LLMs to connect various audio models for audio content generation. Given a text description of an auditory scene, WavJourney first prompts LLMs to generate a structured script dedicated to audio storytelling. The audio script incorporates diverse audio elements, organized based on their spatio-temporal relationships. As a conceptual representation of audio, the audio script provides an interactive and interpretable rationale for human engagement. Afterward, the audio script is fed into a script compiler, converting it into a computer program. Each line of the program calls a task-specific audio generation model or computational operation function (e.g., concatenate, mix). The computer program is then executed to obtain an explainable solution for audio generation. We demonstrate the practicality of WavJourney across diverse real-world scenarios, including science fiction, education, and radio play. The explainable and interactive design of WavJourney fosters human-machine co-creation in multi-round dialogues, enhancing creative control and adaptability in audio production. WavJourney audiolizes the human imagination, opening up new avenues for creativity in multimedia content creation.
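To make the script-to-program step more concrete, here is a minimal Python sketch. Everything in it is an assumption made for illustration: the script fields (`kind`, `text`, `seconds`, `layout`), the `stub_generate` stand-in for real generation models, and the tuple-based program format are hypothetical and do not reproduce WavJourney's actual script schema or compiler output. It only illustrates the general idea of compiling a structured script into a sequence of generation calls followed by `concatenate` and `mix` operations.

```python
from typing import Dict, List, Tuple

SAMPLE_RATE = 8  # hypothetical, tiny rate so the example stays easy to inspect


def stub_generate(kind: str, text: str, seconds: int) -> List[float]:
    """Stand-in for a task-specific model (speech, music, sound effect).

    A real system would call an audio generation model here; this stub
    returns a constant-valued clip so the control flow can be checked.
    """
    level = {"speech": 0.5, "music": 0.2, "sound_effect": 0.3}[kind]
    return [level] * (seconds * SAMPLE_RATE)


def concatenate(clips: List[List[float]]) -> List[float]:
    """Play clips one after another."""
    out: List[float] = []
    for clip in clips:
        out.extend(clip)
    return out


def mix(clips: List[List[float]]) -> List[float]:
    """Overlay clips sample by sample; shorter clips are padded with silence."""
    length = max(len(clip) for clip in clips)
    out = [0.0] * length
    for clip in clips:
        for i, sample in enumerate(clip):
            out[i] += sample
    return out


def compile_script(script: List[Dict]) -> List[Tuple]:
    """Compile a structured script into an ordered list of program steps."""
    program: List[Tuple] = []
    foreground, background = [], []
    for i, item in enumerate(script):
        name = f"clip_{i}"
        program.append(
            ("generate", name, item["kind"], item["text"], item["seconds"])
        )
        target = foreground if item["layout"] == "foreground" else background
        target.append(name)
    # Foreground elements play in sequence; background elements are overlaid.
    program.append(("concatenate", "foreground", foreground))
    program.append(("mix", "output", ["foreground"] + background))
    return program


def execute(program: List[Tuple]) -> List[float]:
    """Run the compiled program step by step and return the final waveform."""
    env: Dict[str, List[float]] = {}
    for step in program:
        op, target = step[0], step[1]
        if op == "generate":
            env[target] = stub_generate(*step[2:])
        elif op == "concatenate":
            env[target] = concatenate([env[name] for name in step[2]])
        elif op == "mix":
            env[target] = mix([env[name] for name in step[2]])
    return env["output"]


if __name__ == "__main__":
    demo_script = [
        {"kind": "speech", "text": "News anchor intro", "seconds": 2, "layout": "foreground"},
        {"kind": "sound_effect", "text": "Probe launch", "seconds": 1, "layout": "foreground"},
        {"kind": "music", "text": "Ambient sci-fi pad", "seconds": 3, "layout": "background"},
    ]
    for line in compile_script(demo_script):
        print(line)
```

Because each step of the compiled program is an explicit, inspectable operation, a user could edit the script and recompile it, which is the kind of interpretability the abstract describes.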

Instruction: Generate an audio in Science Fiction theme: Mars News reporting that Humans send light-speed probe to Alpha Centauri. Start with news anchor, followed by a reporter interviewing a chief engineer from an organization that built this probe, founded by United Earth and Mars Government, and end with the news anchor again.

Instruction: Generate a one-minute introduction to quantum mechanics by a professor.

Instruction: Generate a fictional radio show: "In the bustling artistic landscape of 1920s Paris, a local surrealist artist vanishes without a trace, leaving the community in a state of anxious speculation. The broadcast delves into this perplexing disappearance, exploring its impact on the bohemian circles frequenting the famed nightclub, Le Chat Noir. Tune in for a captivating minute of news that uncovers the layers of mystery shrouding the City of Lights. From police bafflement to public intrigue, we bring you the latest on this enigmatic tale that has both captivated and confounded Parisians. Hosted by Edward Thompson for the BBC World Service, this broadcast serves as a haunting reminder of the secrets that lurk in the corners of artistic brilliance and nocturnal Paris."

The images were generated by Midjourney. The script was generated by ChatGPT 4. Video made by Jeff Barry.


Sci-Fi: "Universal translators malfunction; humans and aliens bond over shared melodies."


Travel Exploration: "Peru's Andes peaks whisper tales of the Inca, where golden cities once stood."


Romantic Drama: "Secrets whispered, emotions swell, two hearts navigating love's turbulent sea."


Radio Play: "Seated by the window, rain outside, Kate listens to Jake's poetry."


Education: "Soundscapes and Symphonies: Introduction to Music Theory and Composition."


We present audio samples generated by WavJourney alongside samples from state-of-the-art (SOTA) text-to-audio generation methods on the AudioCaps dataset. WavJourney outperforms the SOTA methods, particularly when conditioned on intricate text descriptions, and even stands on par with the ground truth. Its compositional design enables WavJourney to model the complex spatio-temporal acoustic relationships among multiple sounds.

Each row contains four audio clips: (a) generated by WavJourney, (b) generated by AudioLDM, (c) generated by Tango, and (d) ground truth audio from the AudioCaps dataset.


Audio Caption: "A man talking followed by a goat baaing then a metal gate sliding while ducks quack and wind blows into a microphone"


Audio Caption: "A train running on a railroad track followed by a vehicle door closing and a man talking in the distance while a train horn honks and railroad crossing warning signals ring"


Audio Caption: "A man speaking followed by a woman talking then plastic clacking as footsteps walk on grass and a rooster crows in the distance"


Audio Caption: "A man speaking over an intercom as a helicopter engine runs followed by several gunshots firing"
